At some point in their past, almost every country has witnessed a political revolution, a change of government following a dramatic and sometimes violent expression of discontent. As a result, emperors have been beheaded, kings dethroned, and presidents exiled. Revolutions are often caused by a slowly growing dissatisfaction in the general population, for instance due to lost wars, lack of food, or high taxes. In other words, the general population feels a strong desire for change. At some point, resentment reaches a boiling point and a single event (another tax hike, another arrest by the secret police) is enough to trigger a cascade of violent protests that can culminate in the guillotine.

The dynamics of political revolutions are in some ways similar to the academic revolution that has recently gripped the field of psychology¹. Over the last two decades, increasing levels of competition for scarce research funding have created a working environment that rewards productivity over reproducibility; this perverse incentive structure has led to findings in the psychological literature that are spectacular and counter-intuitive, but likely false (Frankfurt, 2005; Ioannidis, 2005). In addition, researchers are free to analyze their data without any plan and without any restrictions, leaving the door wide open for what Theodore Barber termed Investigator Data Analysis Effects: "When not planned beforehand, data analysis can approximate a projective technique, such as the Rorschach, because the investigator can project on the data his own expectancies, desires, or biases and can pull out of the data almost any 'finding' he may desire." (Barber, 1976, p. 20).

The general dissatisfaction with the state of the field was only occasionally expressed in print until 2011, when two major events ignited the scientific revolution that is still in full force today. The first was the massive fraud committed by the well-known social psychologist Diederik Stapel, who fabricated data in at least 55 publications. Crucially, Stapel's deceit was not revealed through the scientific process of replication; instead, he was caught because his graduate students became suspicious and started their own investigation. The second event was the publication, by social psychologist Daryl Bem, of nine experiments purporting to show that people can look into the future. More importantly, he succeeded in publishing this result in a flagship outlet, the Journal of Personality and Social Psychology (Bem, 2011). To some researchers, these two events made it clear that when it comes to publishing academic findings in premier outlets, one can apparently get away with just about anything.

Of course, these landmark events were quickly followed by others. For instance, Simmons et al. (2011) gave concrete examples of Barber's Investigator Data Analysis Effects, demonstrating that “undisclosed flexibility in data collection and analysis allows presenting anything as significant”. John et al. (2012) reported that many researchers admit to using “questionable research practices” such as cherry-picking, post-hoc theorizing, and optional stopping (i.e., testing more participants until the desired level of significance is obtained). In addition, more cases of academic misconduct came to light; among those accused are Lawrence Sanna, Dirk Smeesters, and, most recently, Jens Foerster. Moreover, researchers whose work failed to replicate responded in anger, accusing those who conducted the replications of incompetence or worse (e.g., Yong, 2012). Nobel laureate Daniel Kahneman wrote an open letter expressing his concern and urging the field to conduct more replication studies. However, the majority of replication studies published since Kahneman's open letter have failed to replicate the original findings, a general trend corroborated by the contributions to the special issue on replications in the journal Social Psychology (Nosek & Lakens, 2014). As far as replicability is concerned, the proof of the pudding is in the eating, and so far the results have been flat-out disappointing.
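Why optional stopping is harmful can be made concrete with a small simulation. This is a minimal sketch, not an analysis from any of the cited papers, and it rests on simplifying assumptions: the null hypothesis is true (scores are drawn from a normal distribution with mean 0 and a known standard deviation of 1), and each look at the data uses a two-sided one-sample z-test rather than the t-test a real study would use. The helper names `z_test_p` and `false_positive_rate` are hypothetical.

```python
import math
import random

def z_test_p(xs):
    """Two-sided p-value of a one-sample z-test of H0: mean = 0, assuming a known sd of 1."""
    z = sum(xs) / math.sqrt(len(xs))  # sample mean divided by its standard error 1/sqrt(n)
    return math.erfc(abs(z) / math.sqrt(2))

def false_positive_rate(n_sims, looks, alpha=0.05, seed=1):
    """Share of simulated null-true studies that end up 'significant'.

    The hypothetical researcher tests after each sample size in `looks`
    and stops at the first p < alpha."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        xs = [rng.gauss(0.0, 1.0) for _ in range(max(looks))]  # H0 is true: mean 0
        if any(z_test_p(xs[:n]) < alpha for n in looks):
            hits += 1
    return hits / n_sims

fixed = false_positive_rate(4000, looks=[100])                 # one planned test at n = 100
peeking = false_positive_rate(4000, looks=range(10, 101, 10))  # test after every 10 participants
print(f"single test at n = 100:         {fixed:.3f}")
print(f"optional stopping, n = 10..100: {peeking:.3f}")
```

The single planned test should produce "significant" results in roughly 5% of the simulated studies, as advertised. Peeking after every 10 participants and stopping at the first p < .05 pushes that rate well above 5%, even though every individual test is computed correctly; the inflation comes entirely from the undisclosed stopping rule.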

These adverse events, as well as many others, created a perfect storm of skepticism that prompted several psychologists to take up the gauntlet and develop a series of concrete initiatives to address the endemic problems that beset not just psychology but empirical disciplines throughout academia. Before discussing these initiatives, I will briefly present what I personally believe to be the two most important challenges confronting psychological science today.

Footnote

1 The analogy to political revolutions was presented earlier by Bobbie Spellman (e.g., https://morepops.wordpress.com/2013/05/27/research-revolution-2-0-the-current-crisis-how-technology-got-us-into-this-mess-and-how-technology-will-help-us-out/).

The First Fundamental Challenge of Empirical Research: Separating Postdiction from Prediction

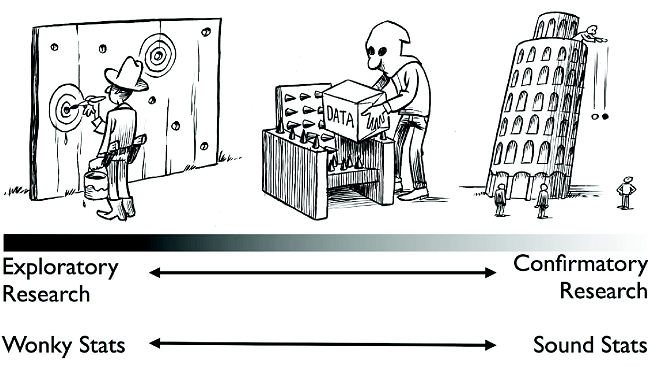

The first fundamental challenge deals with the key distinction between research that is exploratory (i.e., hypothesis-generating) versus confirmatory (i.e., hypothesis-testing). In his book “Methodology”, de Groot (1969, p. 52) states: “It is of the utmost importance at all times to maintain a clear distinction between exploration and hypothesis testing. The scientific significance of results will to a large extent depend on the question whether the hypotheses involved had indeed been antecedently formulated, and could therefore be tested against genuinely new materials. Alternatively, they would, entirely or in part, have to be designated as ad hoc hypotheses (...)”. Similarly, Barber (1976, p. 20) warns: "When the investigator has not planned the data analysis beforehand, he may find it difficult to avoid the pitfall of focusing only on the data which look promising (or which meet his expectations or desires) while neglecting data which do not seem 'right' (which are incongruous with his assumptions, desires, or expectations)."

The core of the problem is that hypothesis testing requires predictions; as the word implies, these are statements about reality made without the benefit of hindsight. Add the benefit of hindsight, however, and predictions turn into postdictions. Postdictions are often remarkably accurate, but their accuracy is hardly surprising. Figure 1 illustrates the continuum from exploratory to confirmatory research and highlights the need to cleanly separate the two. Note that the Texas sharpshooter (Figure 1, left end of continuum), who fires at a barn and only afterwards paints a target around his bullet holes, appears to have remarkably good aim, but only because he uses postdiction instead of prediction. True prediction requires complete transparency, including a preregistered plan of analysis (Figure 1, right end of continuum, where a clear prediction is tested under public scrutiny). Most research today uses an unknown mix of prediction and postdiction. As Figure 1 highlights, statistical methods for hypothesis testing are only valid for research that is strictly confirmatory (e.g., de Groot, 1956/2014; Goldacre, 2008).

It should be stressed that the problem is not with exploratory research itself. Rather, the problem is with dishonesty, that is, pretending that exploratory research was instead confirmatory.
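The price of presenting postdiction as prediction can be quantified in the simplest case. This sketch assumes a hypothetical researcher who measures `k` independent outcome variables, none of which is affected by the manipulation, and then reports whichever one happens to reach p < .05 as if it had been the planned test. Independence is an idealization (real outcome measures are usually correlated, which reduces the inflation somewhat); under it, the chance of at least one "significant" result is 1 - (1 - α)^k. The function names are hypothetical.

```python
import random

def postdiction_rate(k, alpha=0.05):
    """Exact chance that at least one of k independent null tests reaches p < alpha."""
    return 1 - (1 - alpha) ** k

def simulated_rate(k, alpha=0.05, n_sims=20000, seed=2):
    """Monte Carlo check: with a true null and a continuous test statistic,
    each p-value is uniformly distributed on (0, 1)."""
    rng = random.Random(seed)
    hits = sum(
        any(rng.random() < alpha for _ in range(k))  # did any outcome 'work'?
        for _ in range(n_sims)
    )
    return hits / n_sims

for k in (1, 3, 5, 10):
    print(f"k = {k:2d}: exact {postdiction_rate(k):.3f}, simulated {simulated_rate(k):.3f}")
```

With a single preregistered outcome the error rate is the nominal 5%; with five outcomes to choose from after the fact, it is already about 23%, more than four times as high. The reported p-value looks identical in both cases, which is why the distinction can only be recovered from a plan that was fixed before the data were seen.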

The Second Fundamental Challenge of Empirical Research: The Violent Bias of the P-Value

Across the empirical sciences, researchers rely on the infamous p-value to discriminate the signal from the noise. The p-value quantifies the extremeness or unusualness of the obtained results under the assumption that the null hypothesis is true and no difference is present: it is the probability of obtaining results at least as extreme as those observed if the null hypothesis holds. Commonly, researchers "reject the null hypothesis" when p < .05, that is, when chance alone is deemed an insufficient explanation for the results.

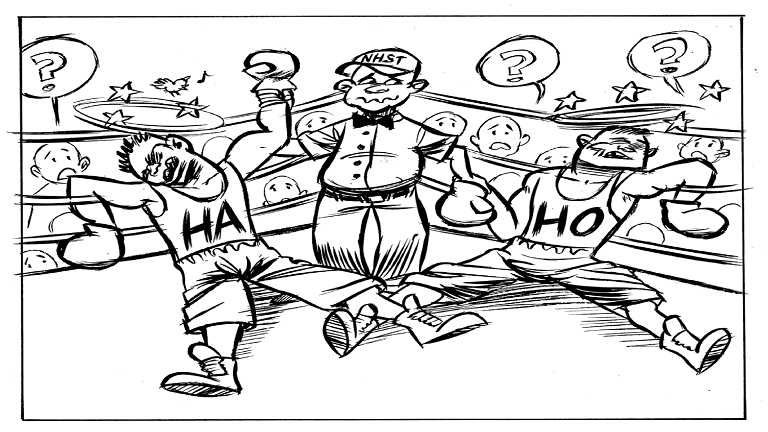

Over the course of decades, statisticians and philosophers of science have repeatedly pointed out that p-values overestimate the evidence against the null hypothesis. That is, p-values suggest that effects are present when the data offer only limited or no support for such a claim (Nuzzo, 2014, and references therein). Ward Edwards (1965, p. 400) stated that "Classical significance tests are violently biased against the null hypothesis". It comes as no surprise that replicability suffers when the most popular method for testing hypotheses is violently biased against the null hypothesis.
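One well-known way to put a number on this bias is the calibration of Sellke, Bayarri, and Berger (2001). It is not among the references of this article and is not a method proposed here; it is used only as an illustration. Under fairly general assumptions about the alternative hypothesis, and for p < 1/e, the Bayes factor in favor of the null cannot fall below -e·p·ln(p). Inverting this bound gives the most evidence against the null that a given p-value could possibly represent. A minimal sketch (the function name is hypothetical):

```python
import math

def max_evidence_against_null(p):
    """Upper bound on the Bayes factor BF10 (alternative vs. null) implied by a p-value,
    using the -e * p * ln(p) calibration, valid only for 0 < p < 1/e."""
    if not 0 < p < 1 / math.e:
        raise ValueError("bound only defined for 0 < p < 1/e")
    min_bf01 = -math.e * p * math.log(p)  # smallest possible Bayes factor in favor of H0
    return 1 / min_bf01

for p in (0.05, 0.01, 0.005, 0.001):
    print(f"p = {p:<6} -> BF10 at most {max_evidence_against_null(p):.1f}")
```

Under this calibration, p = .05 corresponds at best to a Bayes factor of about 2.5 against the null. That is far weaker than the "1 in 20" reading the threshold invites, and it is an upper bound; for a specific, reasonable alternative the evidence is typically weaker still.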

A mathematical analysis of why p-values are violently biased against the null hypothesis takes us too far afield, but the intuition is as follows: the p-value only considers the extremeness of the data under the null hypothesis of no difference, but it ignores the extremeness of the data under the alternative hypothesis. Hence, data can be extreme or unusual under the null hypothesis, but if these data are even more extreme under the alternative hypothesis, then it is nevertheless imprudent to "reject the null". As argued by Berkson (1938, p. 531): "My view is that there is never any valid reason for rejection of the null hypothesis except on the willingness to embrace an alternative one. No matter how rare an experience is under a null hypothesis, this does not warrant logically, and in practice we do not allow it, to reject the null hypothesis if, for any reasons, no alternative hypothesis is credible."

This situation is illustrated in Figure 2. The lesson is that evidence is a relative rather than an absolute concept; grading the decisiveness of the evidence provided by the data requires that we consider both a null hypothesis of no difference and a properly specified alternative hypothesis. By focusing on only one side of the coin, the p-value biases the conclusion away from the null hypothesis, effectively encouraging the pollution of the field with results whose level of evidence is not worth more than a bare mention (e.g., Wetzels et al., 2011).
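The claim that evidence is relative can be illustrated with a deliberately simple Bayes factor. This sketch assumes a one-sample design with a known standard deviation of 1, a null hypothesis H0: μ = 0, and an alternative H1 under which the effect μ follows a normal distribution with mean 0 and standard deviation `tau` (set to 1 purely for illustration). It is not the default Bayes factor of Rouder et al. (2012), which uses different priors; it is chosen because its closed form, BF01 = sqrt(1 + nτ²) · exp(-(z²/2) · nτ²/(1 + nτ²)), makes the comparison transparent. The test statistic is held fixed at z = 2 (p ≈ .046) while the sample size grows:

```python
import math

def p_value(z):
    """Two-sided p-value for a standard-normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))

def bf01(z, n, tau=1.0):
    """Bayes factor for H0 (mu = 0) over H1 (mu ~ Normal(0, tau^2)),
    one-sample design with known sd = 1, where z = sqrt(n) * sample mean."""
    r = n * tau ** 2
    return math.sqrt(1 + r) * math.exp(-0.5 * z ** 2 * r / (1 + r))

z = 2.0
print(f"p = {p_value(z):.3f} regardless of n")
for n in (10, 100, 1000, 10000):
    print(f"n = {n:5d}: BF01 = {bf01(z, n):5.2f}")
```

With 10 observations the data modestly favor H1, but from about 100 observations on, the very same z = 2 is better predicted by H0: under H1 an effect should by then have produced a much larger z, so the data are even more unusual under the alternative than under the null. This is the Jeffreys-Lindley paradox in miniature. The p-value, which consults only the null hypothesis, does not change at all.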

The Future of the Revolution: A Positive Outlook

In short order, the shock effect from well-documented cases of fraud, fudging, and non-replicability has created an entire movement of psychologists who seek to improve the openness and reproducibility of findings in psychology (e.g., Pashler & Wagenmakers, 2012). Special mention is due to the Center for Open Science (http://centerforopenscience.org/); spearheaded by Brian Nosek, this center develops initiatives to promote openness, data sharing, and preregistration. Another initiative is http://psychfiledrawer.org/, a repository where researchers can upload results that would otherwise have been forgotten. In addition, Chris Chambers has argued for the importance of distinguishing between prediction and postdiction by means of Registered Reports; these reports are based on a two-step review process that includes, in the first step, review of a registered analysis plan prior to data collection. This plan identifies, in advance, what hypotheses will be tested and how (Chambers, 2013; see also https://osf.io/8mpji/wiki/home/). Thanks in large part to his efforts, many journals have now embraced this new format. With respect to the violent bias of p-values, Jeff Rouder, Richard Morey, and colleagues have developed a series of Bayesian hypothesis tests that allow a more honest assessment of the evidence that the data provide for and against the null hypothesis (Rouder et al., 2012; see also www.jasp-stats.org). The reproducibility frenzy also includes the development of new methodological tools; proposals for different standards for reporting, analysis, and data sharing; encouragement to share data by default (e.g., http://agendaforopenresearch.org/); and a push toward experiments with high power.

Although some researchers are less enthusiastic about the "replicability movement" than others, it is my prediction that the movement will grow until its impact is felt in other empirical disciplines, including the neurosciences, biology, economics, and medicine. The problems that confront psychology are in no way unique, and this affords psychology an opportunity to lead the way and create dependable guidelines on how to do research well. Such guidelines have tremendous value, both to individual scientists and to society as a whole.

References

Barber, T. X. (1976). Pitfalls in human research: Ten pivotal points. New York: Pergamon Press Inc.

Bem, D. J. (2011). Feeling the future: Experimental evidence for anomalous retroactive influences on cognition and affect. Journal of Personality and Social Psychology, 100, 407-425.

Chambers, C. D. (2013). Registered reports: A new publishing initiative at Cortex. Cortex, 49, 609-610.

De Groot, A. D. (1969). Methodology: Foundations of inference and research in the behavioral sciences. The Hague: Mouton.

De Groot, A. D. (1956/2014). The meaning of “significance” for different types of research. Translated and annotated by Eric-Jan Wagenmakers, Denny Borsboom, Josine Verhagen, Rogier Kievit, Marjan Bakker, Angelique Cramer, Dora Matzke, Don Mellenbergh, and Han L. J. van der Maas. Acta Psychologica, 148, 188-194.

Edwards, W. (1965). Tactical note on the relation between scientific and statistical hypotheses. Psychological Bulletin, 63, 400-402.

Frankfurt, H. G. (2005). On bullshit. Princeton: Princeton University Press.

Goldacre, B. (2008). Bad science. London: Fourth Estate.

Ioannidis, J. P. A. (2005). Why most published research findings are false. PLoS Medicine, 2, 696-701.

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth-telling. Psychological Science, 23, 524-532.

Nosek, B. A., & Lakens, D. (2014). Registered reports: A method to increase the credibility of published results. Social Psychology, 45, 137-141.

Nuzzo, R. (2014). Statistical errors. Nature, 506, 150-152.

Pashler, H., & Wagenmakers, E.-J. (2012). Editors' introduction to the special section on replicability in psychological science: A crisis of confidence? Perspectives on Psychological Science, 7, 528-530.

Rouder, J. N., Morey, R. D., Speckman, P. L., & Province, J. M. (2012). Default Bayes factors for ANOVA designs. Journal of Mathematical Psychology, 56, 356-374.

Simmons, J. P., Nelson, L. D., & Simonsohn, U. (2011). False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant. Psychological Science, 22, 1359-1366.

Wagenmakers, E.-J., Verhagen, A. J., Ly, A., Matzke, D., Steingroever, H., Rouder, J. N., & Morey, R. D. (in press). The need for Bayesian hypothesis testing in psychological science. In S. O. Lilienfeld & I. Waldman (Eds.), Psychological Science Under Scrutiny: Recent Challenges and Proposed Solutions. John Wiley and Sons.

Wagenmakers, E.-J., Wetzels, R., Borsboom, D., van der Maas, H. L. J., & Kievit, R. A. (2012). An agenda for purely confirmatory research. Perspectives on Psychological Science, 7, 627-633.

Wetzels, R., Matzke, D., Lee, M. D., Rouder, J. N., Iverson, G. J., & Wagenmakers, E.-J. (2011). Statistical evidence in experimental psychology: An empirical comparison using 855 t tests. Perspectives on Psychological Science, 6, 291-298.

Yong, E. (2012). Bad copy. Nature, 485, 298-300.